WHAT IS THE INTERNET?

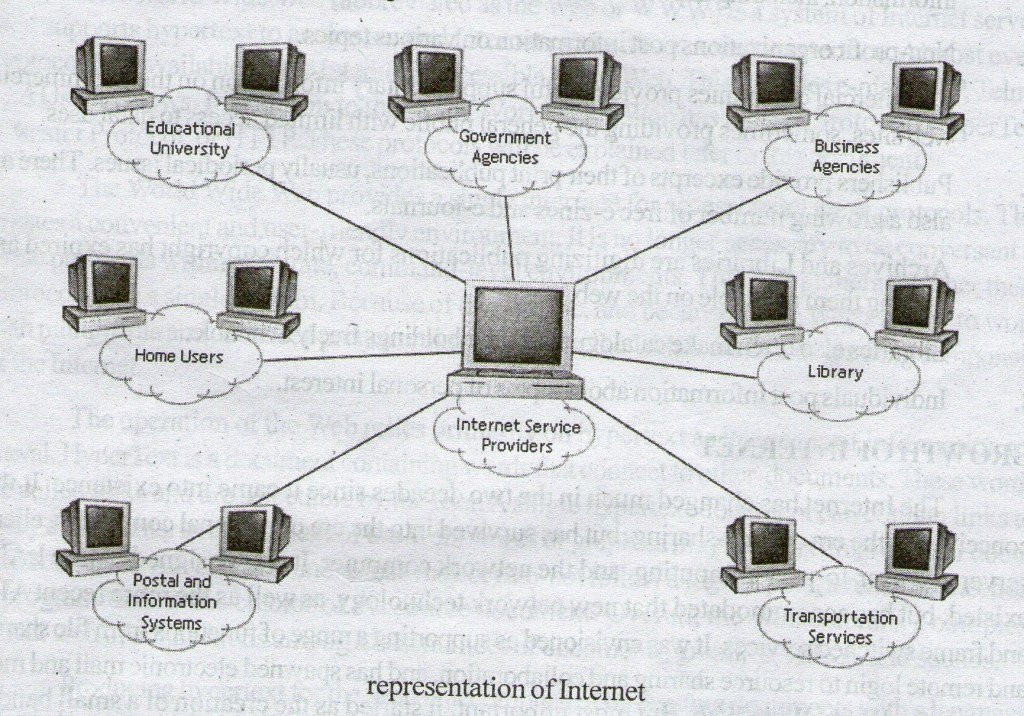

The Internet is a computer network made up of thousands of networks worldwide. No one knows exactly how many computers are connected to the Internet, but they certainly number in the millions.

No one is in charge of the Internet. There are organizations which develop technical aspects of this network and set standards for creating applications on it, but no governing body is in control. The Internet backbone, through which Internet traffic flows, is owned by private companies.

All computers on the Internet communicate with one another using the Transmission Control Protocol/Internet Protocol suite, abbreviated to TCP/IP. Computers on the Internet use a client/server architecture. This means that the remote server machine provides files and services to the user’s local client machine. Software can be installed on a client computer to take advantage of the latest access technology.
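The client/server exchange described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names (`serve_once`, `ask_server`, `demo`) and the reply format are invented for this example, and both ends run on the same machine over the loopback address rather than across the Internet.

```python
import socket
import threading


def serve_once(listener: socket.socket) -> None:
    """Server side: accept one client, read its request, send back a reply."""
    conn, _addr = listener.accept()
    with conn:
        request = conn.recv(1024)                 # bytes sent by the client
        conn.sendall(b"server got: " + request)   # made-up reply format


def ask_server(host: str, port: int, message: bytes) -> bytes:
    """Client side: connect over TCP, send a request, return the reply."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(message)
        return sock.recv(1024)


def demo() -> bytes:
    # Port 0 asks the operating system for any free port on the loopback address.
    with socket.create_server(("127.0.0.1", 0)) as listener:
        listener.settimeout(5)                    # do not wait forever for a client
        port = listener.getsockname()[1]
        worker = threading.Thread(target=serve_once, args=(listener,))
        worker.start()
        reply = ask_server("127.0.0.1", port, b"hello")
        worker.join()
        return reply


if __name__ == "__main__":
    print(demo())
```

The server owns the resource (here, just the ability to build a reply) and the client asks for it; TCP/IP carries the bytes in both directions.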

An Internet user has access to a wide variety of services: electronic mail, file transfer, vast information resources, interest group membership, interactive collaboration, multimedia displays, real-time broadcasting, shopping opportunities, breaking news, and much more.

The Internet consists primarily of a variety of access protocols. Many of these protocols feature programs that allow users to search for and retrieve material made available by the protocol.

It is a collection of networks, a network of networks: computers sharing information via a common set of networking and software protocols.

HISTORY AND OVERVIEW OF THE INTERNET

1969 – Birth of a Network

The Internet as we know it today, in the mid-1990s, traces its origins back to a Defense Department project in 1969. The subject of the project was wartime digital communications. At that time the telephone system was about the only theater-scale communications system in use. A major problem had been identified in its design – its dependence on switching stations that could be targeted during an attack. Would it be possible to design a network that could quickly reroute digital traffic around failed nodes? A possible solution had been identified in theory. That was to build a “web” of datagram networks, called a “catenet”, and use dynamic routing protocols to constantly adjust the flow of traffic through the catenet. The Defense Advanced Research Projects Agency (DARPA) launched the DARPA Internet Program.

1970s – Infancy

DARPA Internet, largely the plaything of academic and military researchers, spent more than a decade in relative obscurity. As Vietnam, Watergate, the Oil Crisis, and the Iranian Hostage Crisis rolled over the nation, several Internet research teams proceeded through a gradual evolution of protocols. In 1975, DARPA declared the project a success and handed its management over to the Defense Communications Agency. Several of today’s key protocols (including IP and TCP) were stable by 1980, and adopted throughout ARPANET by 1983.

Mid-1980s – The Research Net

Let’s outline key features, circa 1983, of what was then called ARPANET. A small computer was a PDP-11/45, and a PDP-11/45 does not fit on your desk. Some sites had a hundred computers attached to the Internet. Most had a dozen or so, probably with something like a VAX doing most of the work – mail, news, EGP routing. Users did their work using DEC VT-100 terminals. FORTRAN was the word of the day. Few companies had Internet access, relying instead on SNA and IBM mainframes. Rather, the Internet community was dominated by universities and military research sites. Its most popular service was the rapid email it made possible with distant colleagues. In August 1983, there were 562 registered ARPANET hosts (RFC 1296).

UNIX deserves at least an honorable mention, since almost all the initial Internet protocols were developed first for UNIX, largely due to the availability of kernel source (for a price) and the relative ease of implementation (relative to things like VMS or MVS). The University of California at Berkeley (UCB) deserves special mention, because their Computer Systems Research Group (CSRG) developed the BSD variants of AT&T’s UNIX operating system. BSD UNIX and its derivatives would become the most common Internet programming platform.

Many key features of the Internet were already in place, including the IP and TCP protocols; ARPANET was fundamentally unreliable in nature, as the Internet is still today. This principle of unreliable delivery means that the Internet only makes a best-effort attempt to deliver packets. The network can drop a packet without any notification to sender or receiver. Remember, the Internet was designed for military survivability. The software running on either end must be prepared to recognize data loss, retransmitting data as often as necessary to achieve its ultimate delivery.
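To make the idea of best-effort delivery concrete, here is a minimal sketch of an end host retransmitting over a link that silently drops packets. It is a toy stop-and-wait scheme, not how TCP is actually implemented; the `LossyChannel` class, the 30% loss rate, and the retry limit are all assumptions made for the illustration, and for simplicity only data packets (never acknowledgements) are lost.

```python
import random


class LossyChannel:
    """Hypothetical best-effort link: silently drops some packets."""

    def __init__(self, loss_rate: float, seed: int = 0) -> None:
        self.loss_rate = loss_rate
        self.rng = random.Random(seed)   # seeded so that runs are repeatable
        self.delivered = []              # (sequence number, payload) pairs received

    def send(self, seq: int, payload: str) -> bool:
        """Return True if an acknowledgement came back, False on silence."""
        if self.rng.random() < self.loss_rate:
            return False                 # dropped; neither end is notified
        self.delivered.append((seq, payload))
        return True


def send_reliably(channel: LossyChannel, packets: list, max_tries: int = 20) -> int:
    """Stop-and-wait: resend each packet until it is acknowledged."""
    attempts = 0
    for seq, payload in enumerate(packets):
        for _ in range(max_tries):
            attempts += 1
            if channel.send(seq, payload):
                break                    # acknowledged, move to the next packet
        else:
            raise RuntimeError(f"packet {seq} was never acknowledged")
    return attempts


if __name__ == "__main__":
    channel = LossyChannel(loss_rate=0.3, seed=1)
    tries = send_reliably(channel, ["a", "b", "c"])
    print("transmissions needed:", tries)
    print("received:", [payload for _, payload in channel.delivered])
```

The network itself does nothing to recover the lost packets; all of the reliability comes from the sender noticing the missing acknowledgement and trying again.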

Late 1980s – The PC Revolution

Driven largely by the development of the PC and LAN technology, subnetting was standardized in 1985 when RFC 950 was released. LAN technology made the idea of a “catenet” feasible – an internetwork of networks. Subnetting opened the possibilities of interconnecting LANs with WANs.
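As a rough illustration of what subnetting does, the sketch below splits one network into four smaller ones with Python's standard `ipaddress` module. The address range 192.0.2.0/24 is a documentation-only block chosen as a stand-in, and the modern prefix notation is used for convenience; neither comes from the text above.

```python
import ipaddress

# 192.0.2.0/24 is a documentation-only range, used here as a stand-in site network.
site = ipaddress.ip_network("192.0.2.0/24")

# Borrow two host bits to split the network into four equal subnets,
# for example one per LAN at the site.
subnets = list(site.subnets(prefixlen_diff=2))
for net in subnets:
    print(net, "netmask", net.netmask, "addresses", net.num_addresses)

# Find the subnet that a given host belongs to.
host = ipaddress.ip_address("192.0.2.130")
home = next(net for net in subnets if host in net)
print(host, "is on", home)
```

Each subnet can sit on its own LAN, while routers outside the site still see a single network.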

The National Science Foundation (NSF) started the Supercomputer Centers program in 1986. Until then, supercomputers such as Crays were largely the playthings of large, well-funded universities and military research centers. NSF’s idea was to make supercomputer resources available to those of more modest means by constructing five supercomputer centers around the country and building a network linking them with potential users. NSF decided to base their network on the Internet protocols, and NSFNET was born. For the next decade, NSFNET would be the core of the U.S. Internet, until its privatization and ultimate retirement in 1995.

Domain naming was stable by 1987 when RFC 1034 was released. Until then, hostnames were mapped to IP addresses using static tables, but the Internet’s exponential growth had made this practice infeasible.
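The static-table approach can be pictured with a short sketch. The table format below is loosely modelled on a hosts file, and every address and name in it is made up for illustration; the point is only that a name missing from the local copy of the table cannot be resolved at all.

```python
HOSTS_TABLE = """\
# address      names        (all entries are made up for illustration)
192.0.2.10     alpha.example
192.0.2.20     beta.example  beta
"""


def parse_hosts(text: str) -> dict[str, str]:
    """Build a name -> address map from a hosts-style table."""
    table = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()   # drop comments and blank lines
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            table[name.lower()] = address
    return table


hosts = parse_hosts(HOSTS_TABLE)
print(hosts.get("beta"))            # found: the name is listed in the table
print(hosts.get("gamma.example"))   # None: this host was never added to the table
```

Every site had to keep its copy of such a table up to date by hand or by periodic download, which is what stopped scaling as the number of hosts grew.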

In the late 1980s, important advances related poor network performance with poor TCP performance, and a string of papers by the likes of Nagle and Van Jacobson (RFC 896, RFC 1072, RFC 1144, RFC 1323) presented key insights into TCP performance. The 1988 Internet Worm was the largest security failure in the history of the Internet. More information can be found in RFC 1135. All things considered, it could happen again.

Early 1990s – Address Exhaustion and the Web

In the early 90s, the first address exhaustion crisis hit the Internet technical community. The present solution, CIDR, will sustain the Internet for a few more years by making more efficient use of IP’s existing 32-bit address space. For a more lasting solution, IETF is looking at IPv6 and its 128-bit address space, but CIDR is here to stay.
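Two short calculations may help here: how CIDR lets several adjacent networks be advertised as one block, and how the 32-bit and 128-bit address spaces compare in size. This is a sketch using Python's standard `ipaddress` module; the private 10.1.x.0/24 networks are arbitrary stand-ins, not real allocations.

```python
import ipaddress

# Four adjacent /24 networks (private addresses used as stand-ins).
blocks = [ipaddress.ip_network(f"10.1.{n}.0/24") for n in range(4)]

# CIDR allows them to be summarised as a single, shorter prefix.
summary = list(ipaddress.collapse_addresses(blocks))
print(summary)                       # one /22 covering all four /24 networks

# Size of the address spaces mentioned above.
ipv4_total = 2 ** 32                 # 32-bit addresses
ipv6_total = 2 ** 128                # 128-bit addresses
print(ipv4_total)                    # 4294967296
print(ipv6_total // ipv4_total == 2 ** 96)
```

One routing-table entry instead of four is the kind of saving that stretches the existing address space; the much larger IPv6 space removes the shortage itself.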

Crisis aside, the World Wide Web (WWW) has been one of the Internet’s most exciting recent developments. The idea of hypertext has been around for more than a decade, but in 1989 a team at the European Center for Particle Research (CERN) in Switzerland developed a set of protocols for transferring hypertext via the Internet. In the early 1990s it was enhanced by a team at the National Center for Supercomputing Applications (NCSA) at the University of Illinois – one of NSF’s supercomputer centers. The result was NCSA Mosaic, a graphical, point-and-click hypertext browser that made the Internet easy. The resulting explosion in “Web sites” drove the Internet into the public eye.

Mid 1990s – The New Internet

Of at least as much interest as the Internet’s technical progress in the 1990s has been its sociological progress. It has already become part of the national vocabulary, and seems headed for even greater prominence. It has been accepted by the business community, with a resulting explosion of service providers, consultants, books, and TV coverage. It has given birth to the Free Software Movement.

The Free Software Movement owes much to bulletin board systems, but really came into its own on the Internet, due to a combination of forces. The public nature of the Internet’s early funding ensured that much of its networking software was non-proprietary. The emergence of anonymous FTP sites provided a distribution mechanism that almost anyone could use. Network newsgroups and mailing lists offered an open communication medium. Last but not least were individualists like Richard Stallman, who wrote EMACS, launched the GNU Project and founded the Free Software Foundation. In the 1990s, Linus Torvalds wrote Linux, the popular (and free) UNIX clone operating system.

The explosion of capitalist conservatism, combined with a growing awareness of the Internet’s business value, has led to major changes in the Internet community. Many of them have not been for the good.

First, there seems to be a growing departure from the Internet’s history of open protocols, published as RFCs. Many new protocols are being developed in an increasingly proprietary manner. IGRP, a trademark of Cisco Systems, has the dubious distinction of being the most successful proprietary Internet routing protocol, capable only of operation between Cisco routers. Other protocols, such as BGP, are published as RFCs, but with important operational details omitted. The notoriously mis-named Open Software Foundation has introduced a whole suite of “open” protocols whose specifications are available – for a price – and not on the net. I am forced to wonder: 1) why do we need a new RPC? and 2) why won’t OSF tell us how it works?

People forget that businesses have tried to run digital communications networks in the past. IBM and DEC both developed proprietary networking schemes that only ran on their hardware. Several information providers did very well for themselves in the 80s, including LEXIS/NEXIS, Dialog, and Dow Jones. Public data networks were constructed by companies like Tymnet and run into every major US city. CompuServe and others built large bulletin-board-like systems. Many of these services still offer a quality and depth of coverage unparalleled on the Internet (examine Dialog if you are skeptical of this claim). But none of them offered nudie GIFs that anyone could download. None of them let you read through the RFCs and then write a Perl script to tweak the one little thing you needed to adjust. None of them gave birth to a Free Software Movement. None of them caught people’s imagination.

The very existence of the Free Software Movement is part of the Internet saga, because free software would not exist without the net. “Movements” tend to arise when progress offers us new freedoms and we find new ways to explore and, sometimes, to exploit them. The Free Software Movement has offered what would have been unimaginable when the Internet was formed – games, editors, windowing systems, compilers, networking software, and even entire operating systems available for anyone who wants them, without licensing fees, with complete source code, and all you need is Internet access. It also offers challenges, forcing us to ask what changes are needed in our society to support these new freedoms that have touched so many people. And it offers chances at exploitation, from the businesses using free software development platforms for commercial code, to the Internet Worm and security risks of open systems.

People wonder whether progress is better served through government funding or private industry. The Internet defies the popular wisdom of “business is better”. Both business and government tried to build large data communication networks in the 1980s. Business depended on good market decisions; the government researchers based their system on openness, imagination and freedom. Business failed; Internet succeeded. Our reward has been its commercialization.

For the next few years, the Internet will almost certainly be content-driven. Although new protocols are always under development, we have barely begun to explore the potential of just the existing ones. Chief among these is the World Wide Web, with its potential for simple on-line access to almost any information imaginable. Yet even as the Internet intrudes into society, remember that over the last two decades “The Net” has developed a culture of its own, one that may collide with society’s. Already business is making its pitch to dominate the Internet. Already Congress has deemed it necessary to regulate the Web.

WHO OWNS THE INTERNET?

No one actually owns the Internet, and no single person or organisation controls the Internet in its entirety. More of a concept than an actual tangible entity, the Internet relies on a physical infrastructure that connects networks to other networks. There are many organisations, corporations, governments, schools, private citizens and service providers that own pieces of infrastructure, but there is no one body that owns it all. There are, however, organisations that oversee and standardise what happens on the Internet and assign IP addresses and domain names, such as the National Science Foundation, the Internet Engineering Task Force, ICANN, InterNIC and the Internet Architecture Board.

WHO PUTS INFORMATION ON THE INTERNET?

Individuals and organizations make information available through the internet for a variety of reasons. Some of this information is freely available to the general public, while some of it is restricted to a particular user community. The following are some of the people who put information on the internet, and the types of information they make freely available.

- Governments often have a mandate to make information freely available to their citizens, and are publishing a wealth of statistical and other information on the web.

- University researchers, educators and students make available various types of academic information, including unpublished articles.

- Non-profit organizations post information on various topics.

- Commercial companies provide useful supplementary information on their commercial web sites, sometimes providing the general public with limited access to databases.

- Publishers provide excerpts of their print publications, usually periodical issues. There are also a growing number of free e-zines and e-journals.

- Archives and Libraries are digitizing publications for which copyright has expired and making them available on the web.

- Libraries also often make catalogues of their holdings freely available.

- Individuals post information about topics of personal interest.

GROWTH OF THE INTERNET

The Internet has changed much in the two decades since it came into existence. It was conceived in the era of time-sharing, but has survived into the era of personal computers, client-server and peer-to-peer computing, and the network computer. It was designed before LANs existed, but has accommodated that new network technology, as well as the more recent ATM and frame switched services. It was envisioned as supporting a range of functions from file sharing and remote login to resource sharing and collaboration, and has spawned electronic mail and more recently the World Wide Web. But most important, it started as the creation of a small band of dedicated researchers, and has grown to be a commercial success with billions of dollars of annual investment.

One should not conclude that the Internet has now finished changing. The Internet, although a network in name and geography, is a creature of the computer, not the traditional network of the telephone or television industry. It will, indeed it must, continue to change and evolve at the speed of the computer industry if it is to remain relevant. It is now changing to provide such new services as real time transport, in order to support, for example, audio and video streams. The availability of pervasive networking (i.e., the Internet) along with powerful affordable computing and communications in portable form (i.e., laptop computers, two-way pagers, PDAs, cellular phones), is making possible a new paradigm of nomadic computing and communications.

This evolution will bring us new applications – Internet telephone and, slightly further out, Internet television. It is evolving to permit more sophisticated forms of pricing and cost recovery, a perhaps painful requirement in this commercial world. It is changing to accommodate yet another generation of underlying network technologies with different characteristics and requirements, from broadband residential access to satellites. New modes of access and new forms of service will spawn new applications, which in turn will drive further evolution of the net itself.

COMPONENTS OF THE INTERNET

WORLD WIDE WEB

The World Wide Web (abbreviated as the Web or WWW) is a system of Internet servers that supports hypertext to access several Internet protocols on a single interface. Almost every protocol type available on the Internet is accessible on the Web. This includes e-mail, FTP, Telnet, and Usenet News. In addition to these, the World Wide Web has its own protocol: Hypertext Transfer Protocol, or HTTP. These protocols will be explained later in this document.

The World Wide Web provides a single interface for accessing all these protocols. This creates a convenient and user-friendly environment. It is no longer necessary to be conversant in these protocols within separate, command-level environments. The Web gathers together these protocols into a single system. Because of this feature, and because of the Web’s ability to work with multimedia and advanced programming languages, the Web is the fastest-growing component of the Internet.
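To show what an HTTP exchange looks like in practice, here is a minimal sketch in which a tiny Web server and a client run on the same machine. It uses only Python's standard `http.server` and `http.client` modules; the handler class, the page content, and the function name `fetch_once` are invented for this illustration and do not describe any particular Web site.

```python
import http.client
import http.server
import threading


class HelloHandler(http.server.BaseHTTPRequestHandler):
    """Hypothetical handler: answers every GET with one small HTML page."""

    def do_GET(self) -> None:
        body = b"<html><body><a href='/next'>next page</a></body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # keep the demo quiet


def fetch_once() -> tuple[int, str, bytes]:
    """Start a local server, send it one GET request, and return the response."""
    server = http.server.HTTPServer(("127.0.0.1", 0), HelloHandler)
    server.timeout = 5                         # give up if no request arrives
    thread = threading.Thread(target=server.handle_request)  # serve one request
    thread.start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        conn.request("GET", "/")               # the client asks for a page
        response = conn.getresponse()
        result = (response.status, response.getheader("Content-Type"), response.read())
        conn.close()
    finally:
        thread.join()
        server.server_close()
    return result


if __name__ == "__main__":
    status, content_type, body = fetch_once()
    print(status, content_type)
    print(body.decode())
```

A browser does essentially the same thing as the client half of this sketch, then renders the returned HTML instead of printing it.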

The operation of the Web relies primarily on hypertext as its means of information retrieval. Hypertext is a document containing words that connect to other documents. These words are called links and are selectable by the user. A single hypertext document can contain links to many documents. In the context of the Web, words or graphics may serve as links to other documents, images, video, and sound. Links may or may not follow a logical path, as each connection is programmed by the creator of the source document. Overall, the Web contains a complex virtual web of connections among a vast number of documents, graphics, videos, and sounds.

Producing hypertext for the Web is accomplished by creating documents with a language called Hypertext Markup Language, or HTML. With HTML, tags are placed within the text to accomplish document formatting, visual features such as font size, italics and bold, and the creation of hypertext links. Graphics and multimedia may also be incorporated into an HTML document. HTML is an evolving language, with new tags being added as each upgrade of the language is developed and released. The World Wide Web Consortium (W3C), led by Web founder Tim Berners-Lee, coordinates the efforts of standardizing HTML. The W3C now calls the language XHTML and considers it to be an application of the XML language standard.
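The role of tags can be seen in a small sketch that reads an HTML fragment and pulls out its hypertext links. It relies on Python's standard `html.parser` module; the `LinkCollector` class and the sample page are made up for this example.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the target of every <a href="..."> link in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":                        # anchor tags create hypertext links
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


# A made-up page: <b> and <i> only change formatting, <a> creates links.
PAGE = """
<html><body>
<p>Read the <a href="history.html">history</a> or see the
<a href="http://www.faqs.org/">FAQ archive</a>. <b>Bold</b> and <i>italic</i>
are formatting tags, not links.</p>
</body></html>
"""

collector = LinkCollector()
collector.feed(PAGE)
print(collector.links)   # ['history.html', 'http://www.faqs.org/']
```

The same page therefore carries both presentation (bold, italics) and navigation (links), which is what makes it hypertext rather than plain text.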

The World Wide Web consists of files, called pages or home pages, containing links to documents and resources throughout the Internet.

The Web provides a vast array of experiences including multimedia presentations, real-time collaboration, interactive pages, radio and television broadcasts, and the automatic “push” of information to a client computer. Programming languages such as Java, JavaScript, Visual Basic, ColdFusion and XML are extending the capabilities of the Web. A growing amount of information on the Web is served dynamically from content stored in databases. The Web is therefore not a fixed entity, but one that is in a constant state of development and flux.

E-MAIL

Electronic mail, or e-mail, allows computer users locally and worldwide to exchange messages. Each user of e-mail has a mailbox address to which messages are sent. Messages sent through e-mail can arrive within a matter of seconds.

A powerful aspect of e-mail is the option to send electronic files to a person’s e-mail address. Non-ASCII files, known as binary files, may be attached to e-mail messages. These files are referred to as MIME attachments. MIME stands for Multipurpose Internet Mail Extensions, and was developed to help e-mail software handle a variety of file types. For example, many e-mail programs, such as Microsoft Outlook, offer the ability to read files written in HTML, which is itself a MIME type.
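A MIME attachment can be built with Python's standard `email` package, as in the sketch below. The addresses, subject, file name, and payload are placeholders invented for the example, and nothing is actually sent; the message is only assembled and inspected.

```python
from email.message import EmailMessage

msg = EmailMessage()
msg["From"] = "alice@example.org"        # hypothetical sender
msg["To"] = "bob@example.org"            # hypothetical recipient
msg["Subject"] = "Report attached"
msg.set_content("The report is attached.")

# Arbitrary binary (non-ASCII) data standing in for a real file.
binary_data = bytes(range(256))
msg.add_attachment(
    binary_data,
    maintype="application",
    subtype="octet-stream",
    filename="report.bin",
)

# Each part of the message carries its own MIME type and transfer encoding.
for part in msg.walk():
    print(part.get_content_type(), part.get("Content-Transfer-Encoding"))
```

The binary part is encoded as text (base64) so that it can travel through mail systems designed for plain ASCII, and the receiving program uses the MIME type to decide how to handle it.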

TELNET

Telnet is a program that allows you to log into computers on the Internet and use online databases, library catalogs, chat services, and more. There are no graphics in Telnet sessions, just text. To Telnet to a computer, you must know its address. This can consist of words (locis.loc.gov) or numbers (140.147.254.3). Some services require you to connect to a specific port on the remote computer. In this case, type the port number after the Internet address. Example: telnet nri.reston.va.us 185

Telnet is available on the World Wide Web. Probably the most common Web-based resources available through Telnet have been library catalogs, though most catalogs have since migrated to the Web. For a Telnet link to work, a Telnet program must be installed on your local computer and configured to work with your Web browser.

With the increasing popularity of the Web, Telnet has become less frequently used as a means of access to information on the Internet.

FTP

FTP stands for File Transfer Protocol. This is both a program and the method used to transfer files between computers. Anonymous FTP is an option that allows users to transfer files from thousands of host computers on the Internet to their personal computer account. FTP sites contain books, articles, software, games, images, sounds, multimedia, course work, data sets, and more.

If your computer is directly connected to the Internet via an Ethernet cable, you can use one of several PC software programs, such as WS_FTP for Windows, to conduct a file transfer.

FTP transfers can be performed on the World Wide Web without the need for special software. In this case, the Web browser will suffice. When you download software from a Web site to your local machine, you are often using FTP. You can also retrieve FTP files via search engines such as FtpFind, located at http://WWW.ftpfind.com/. This option is easiest because you do not need to know FTP program commands.

E-MAIL DISCUSSION GROUPS

One of the benefits of the Internet is the opportunity it offers to people worldwide to communicate via e-mail. The Internet is home to a large community of individuals who carry out active discussion organized around topic-oriented forums distributed by e-mail. These are administered by software programs. Probably the most common program is the listserv.

A great variety of topics are covered by listservs, many of them academic in nature. When you subscribe to a listserv, messages from other subscribers are automatically sent to your electronic mailbox. You subscribe to a listserv by sending an e-mail message to a computer program called a list server. List servers are located on computer networks throughout the world. This program handles subscription information and distributes messages to and from subscribers. You must have an e-mail account to participate in a listserv discussion group. Visit Tile.net at http://tile.net/ to see an example of a site that offers a searchable collection of e-mail discussion groups.

Majordomo and Listproc are two other programs that administer e-mail discussion groups. The commands for subscribing to and managing your list memberships are similar to those of listserv.

USENET NEWS

Usenet News is a global electronic bulletin board system in which millions of computer users exchange information on a vast range of topics. The major difference between Usenet News and e-mail discussion groups is the fact that Usenet messages are stored on central computers, and users must connect to these computers to read or download the messages posted to these groups. This is distinct from e-mail distribution, in which messages arrive in the electronic mailboxes of each list member.

Usenet itself is a set of machines that exchanges messages, or articles, from Usenet discussion forums, called newsgroups. Usenet administrators control their own sites, and decide which (if any) newsgroups to sponsor and which remote newsgroups to allow into the system.

There are thousands of Usenet newsgroups in existence. While many are academic in nature, numerous newsgroups are organized around recreational topics. Much serious computer related work takes place in Usenet discussions. A small number of e-mail discussion groups also exist as Usenet newsgroups.

The Usenet news feed can be read by a variety of newsreader software programs. For example, the Netscape suite comes with a newsreader program called Messenger. Newsreaders are also available as standalone products.

FAQ, RFC, FYI

FAQ stands for Frequently Asked Questions. These are periodic postings to Usenet newsgroups that contain a wealth of information related to the topic of the newsgroup. Many FAQs are quite extensive. FAQs are available by subscribing to individual Usenet newsgroups. A Web-based collection of FAQ resources has been collected by The Internet FAQ Consortium and is available at http://www.faqs.org/

RFC stands for Request for Comments. These are documents created by and distributed to the Internet community to help define the nuts and bolts of the Internet. They contain both technical specifications and general information.

FYI stands for For Your Information. These notes are a subset of RFCs and contain information of interest to new Internet users.

Links to indexes of all three of these information resources are available on the University Libraries Web site at http://library.albany.edu/internet/reference/faqs.html

CHAT & INSTANT MESSAGING

Chat programs allow users on the Internet to communicate with each other by typing in real time. They are sometimes included as a feature of a Web site, where users can log into the “chat room” to exchange comments and information about the topics addressed on the site. Chat may take other, more wide-ranging forms. For example, America Online is well known for sponsoring a number of topical chat rooms.

Internet Relay Chat (IRC) is a service through which participants can communicate with each other on hundreds of channels. These channels are usually based on specific topics. While many topics are frivolous, substantive conversations are also taking place. To access IRC, you must use an IRC software program.

A variation of chat is the phenomenon of instant messaging. With instant messaging, a user on the Web can contact another user currently logged in and type a conversation. Most famous is America Online’s Instant Messenger. ICQ, MSN and Yahoo also offer chat programs.

Other types of real-time communication are addressed in the tutorial Understanding the World Wide Web.

COMMON WEB TERMINOLOGY

bps (bits per second): A measurement of the speed at which data travels from one place to another. A 57,600 bps modem can theoretically transmit about 57,600 bits of data per second.

client: A program that requests services from other programs or computers that are functioning as servers or hosts.

DNS (Domain Name System): DNS servers translate symbolic machine names (such as doeace.org) into numerical addresses (128.223.142.14).

Email (electronic mail): Messages sent and received via a computer network.

FAQ (frequently asked questions): A document that answers common questions about a particular subject.

Flame: A “flame” usually refers to any message or article that contains strong criticism, usually irrational or highly emotional.

ftp (file transfer protocol): A way to transfer files from one computer to another via the Internet. Many sites on the Internet have repositories of software and files that you can download using an ftp client like Fetch or WS_FTP.

home page: The main or leading web page of an organization’s or individual’s web site.

host: A computer that provides services to other client computers on a network. On the Internet a single computer often provides multiple host functions, such as processing email, serving web pages, and running applications.

HTML (Hypertext Markup Language): The language of the World Wide Web, a set of codes that tells a computer how to display the text, graphics, and other objects that comprise a web page. The central functional element of HTML is the hypertext link, which is a word or picture you can click on to retrieve another web page and display it on your computer screen.

IMAP (Internet Message Access Protocol): A popular method used by programs like Outlook, Eudora, and Netscape to retrieve email from an email server.

IP address: A computer’s unique Internet address, which usually looks like this: 128.223.142.14. Most computers also have a domain name assigned to them, which represents cryptic IP addresses with words that are easier to remember (e.g., gladstone.uoregon.edu).

mailing list: An email-based forum for discussing a particular topic. Mailing lists are administrated by a central program that distributes messages to all participants.

MIME (Multipurpose Internet Mail Extensions): A system for encoding binary data so it can be included with text messages sent across the Internet. Email programs often use MIME to encode attachments.

network: Two or more computing devices connected together by wiring, fiber optic cable, wireless circuits, or other means. The Internet is a network that connects thousands of computer networks.

POP (Post Office Protocol): An older method used by programs like Eudora or Netscape to retrieve email from a mail server.

protocol: A precise definition of how computers interact with one another on a network. In order for the Internet to work reliably, participants agree to set up their systems in accordance with a specific set of protocols, ensuring compatibility between systems.

server: A computer or application that provides files, data, or some other central body of information to multiple client computers by means of a network.

ssh: Secure Shell software that encrypts communications over the Internet.

TCP/IP (Transmission Control Protocol/Internet Protocol): These are two of the main protocols of the Internet. In order for a computer to connect to the Internet, it must have some kind of TCP/IP communication software installed on it.

Telnet: A way for users to create an unencrypted terminal session with a remote system.

USENET: A worldwide collection of discussion groups that use the Internet to transfer tens of thousands of messages among a network of servers set up at sites around the world.

URL (Uniform Resource Locator): A fancy term for the address of a World Wide Web page or other resource.

World Wide Web: A system of linked servers that distribute text, graphics, and multimedia information to users all over the world.
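Two of the glossary entries above lend themselves to a quick worked example: the bps entry (how long a transfer takes at a given line speed) and the URL entry (what the parts of an address are). The sketch below is a best-case estimate only; it ignores protocol overhead and modem compression, and the 1,000,000-byte file size is an arbitrary assumption. The URL is one that appears earlier in this document.

```python
from urllib.parse import urlsplit


def transfer_seconds(size_bytes: int, bps: int) -> float:
    """Best-case time to move size_bytes over a link rated at bps bits per second."""
    return size_bytes * 8 / bps      # 8 bits per byte


# A 1,000,000-byte file over the 57,600 bps modem from the bps entry.
print(round(transfer_seconds(1_000_000, 57_600), 1))   # 138.9 seconds

# Splitting a URL into its protocol, host name, and path.
parts = urlsplit("http://library.albany.edu/internet/reference/faqs.html")
print(parts.scheme)      # http
print(parts.hostname)    # library.albany.edu
print(parts.path)        # /internet/reference/faqs.html
```

The host name is the part that DNS translates into an IP address; the scheme tells the client which protocol to speak once it connects.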

GUIDELINES FOR INTERNET COMMUNICATION

Write concise messages:

A large number of messages are transmitted on the Internet every day. To help reduce the amount of traffic on the Internet and ease the load of correspondence on the recipients, keep the length of your messages to a minimum.

Compose relevant responses:

When replying to a message, only include the sections of the previous message that are relevant to your response. You can use some of the conventions or abbreviations of email communication such as FYI (for your information), BTW (by the way), and 🙂 (a smiley face) for conveying tone and feeling in messages.

Include Return Address Information:

Configure your email client to include the return email address. When signing a message, include alternate modes of communication, such as your telephone number or street address. Most email programs include the capability to create a signature file that automatically inserts your name and other pertinent information at the end of a message.

Make Subject Lines Descriptive:

A message with a generic subject like Information, or with no subject line at all, will often be overlooked by a recipient who gets a large number of email messages. Use a short descriptive phrase that summarizes your message.

When Replying, Refer to the Original Message:

When you are replying to a message, first delete the unnecessary parts of the original mail and retain specific points to which you need to respond. Then insert your response after each related point.

Acknowledge when you receive an Email:

Ensure that you notify the sender that you have received their message.

Check Your Email on a Regular Basis:

Senders of email expect a prompt response. Check your email account every few days to ensure that all of your messages are attended to.

Reply in a Timely Manner:

Process mail and respond to messages immediately. If you fail to check your email regularly, the volume of unread messages will become unmanageable. If you regularly receive a large load of email, you can manage it by using an add-on utility.

Don’t Send Personal Messages Via Newsgroups or Mailing Lists:

If a message is meant for one individual only, do not send it to them via a newsgroup or list that goes to many recipients. Always look at the address line at the top of your message to make sure you are responding only to the person(s) you wish to receive the message.

Learn to Properly Convey Irony, Sarcasm, and Humor:

Often, a good-natured attempt to be witty can be misunderstood. Use a conversational tone in your messages only when the recipient is familiar with your personal style of communication.

Don’t Publicly Criticize Other Users:

Civil discourse is better than emotive or vulgar communication. If an issue requires a harsh response, avoid using email. Instead, communicate directly with the person.
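Several of the guidelines above (a descriptive subject line, return address information, and a signature) can be sketched with Python's standard `email.message` module. This is only an illustration: the `compose` helper, the addresses, and the signature text are hypothetical, and a real email client handles these details through its own settings.

```python
from email.message import EmailMessage

# All names and addresses below are hypothetical placeholders.
SIGNATURE = "\n".join([
    "-- ",
    "A. Sender",
    "Phone: +00 0000 000000",
])


def compose(sender, recipient, subject, body):
    """Build a message with a descriptive subject, a return address, and a signature."""
    if not subject.strip():
        # A missing subject line is easily overlooked by the recipient.
        raise ValueError("a descriptive subject line is required")
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Reply-To"] = sender  # return address information
    msg["Subject"] = subject
    # Append the signature so contact details close every message.
    msg.set_content(body.rstrip() + "\n\n" + SIGNATURE + "\n")
    return msg


message = compose(
    "sender@example.com",
    "recipient@example.com",
    "Agenda for Friday's budget meeting",
    "Please find the three agenda items below.",
)
print(message)
```

The sketch only builds the message; sending it would require a mail server, which is outside the scope of these guidelines.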

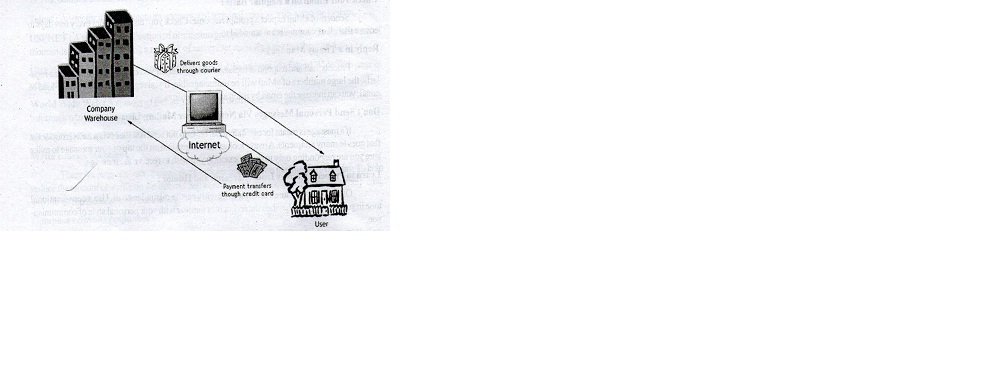

WHAT IS ECOMMERCE?

The basic elements of electronic commerce.

Business includes processes such as recruiting, hiring, and managing, besides buying and selling. The Internet and related technologies provide a medium for businesses to exchange information about processes. For example, the product range and specifications can be disseminated to retailers from a manufacturer.

ECommerce is the abbreviated form of electronic commerce. This term can be defined as business activities that are conducted by using electronic data transmission via the Internet and the World Wide Web.

In business-to-consumer or B2C transactions, an individual consumer selects and purchases a product or service over the Internet. For example, Amazon.com conducts B2C transactions over the Web. In a business-to-business or B2B transaction, two businesses conduct their transactions via the Internet. B2B transactions account for a much larger proportion of directly generated revenue than B2C transactions do. In addition, business processes that are not directly involved in selling and purchasing account for the majority of business activities on the Internet. For example, a subsidiary company can place purchase orders for its raw materials from a manufacturing company.

B2B transactions over networks pre-date the emergence of the World Wide Web. Since the 1960s, some businesses have used electronic data interchange (EDI) to exchange data related to purchasing and billing between corporate information systems. However, traditional EDI was expensive, and the Internet coupled with the World Wide Web offers a cheaper and universal approach to B2B transactions.

DIFFERENCES BETWEEN ELECTRONIC COMMERCE AND TRADITIONAL COMMERCE

Commerce is the exchange of goods and services and involves several interdependent business processes. A concept of a good or service must be designed or invented. The concept must be produced through manufacturing if it is a good, or implemented if it is a service. Buyers need to be informed about the existence and value of a seller’s goods and services through marketing and promotions. The good or service must be shipped or otherwise transferred from the seller to the buyer. Accounting systems are needed to track expenses and payments.

Underlying each of these business processes is the creation and transmission of information. In traditional commerce, this information was generally exchanged through paper, verbally, or through first-hand experience. For example, if you were interested in buying a new car, you would probably visit a dealership and talk to a salesperson. In addition, you could take a test drive to gain first-hand experience or read a review in a magazine.

In electronic commerce, some or all of this information has been moved to the Internet. In the example of purchasing a new car, you could view information online that you previously had to visit a dealership to access. Because you would not have to spend time traveling to each dealership, you could more easily compare prices from several sources. You might also find reviews online from other people about the cars they own. This might not replace the need for a test drive, but it might assist you in narrowing your selection of which cars to test drive.

WHAT IS EGOVERNANCE?

eGovernment is the use of Information and Communications Technologies (ICTs) to promote more efficient governance by allowing:

- Better delivery of public services

- Improved access to information

- Increased accountability of government to its citizens

The five major goals of eGovernment are:

- Efficient citizen grievance redressal

- Strengthening good governance

- Broadening public participation

- Improving productivity and efficiency of the bureaucracy

- Promoting economic growth

IMPACT OF INTERNET

The Internet is an “enabling technology.” When its introduction is sensitive to local values and committed to local capacity-building, it offers important opportunities to:

- Open dialogue. Facilitates knowledge sharing, awareness of alternative perspectives, and open exchange by enabling low-cost networking.

- Improve governance. Raises efficiency, transparency, and participatory systems.

- Improve social and human rights conditions. Expands access to better quality education, healthcare, and disaster relief capacity.

- Reduce poverty. Opens new opportunities for bypassed groups such as women, the poor, rural populations, and children.

- Introduce economic opportunities. Develops E-commerce and Information and Communications Technologies (ICT) sector.

- Improve environmental management. Supports GIS and food security early warning systems.

- Support indigenous knowledge. Documents knowledge within communities.

GROWTH RATE OF INTERNET IN INDIA

During the first three years of the VSNL monopoly, the Internet subscriber base increased slowly. By the end of March 1998, there were almost 1,40,000 subscribers. At the end of VSNL’s monopoly, things changed dramatically. Among other factors, the entry of private players, unlimited and open competition, and the lowering of tariffs led to a phenomenal surge in subscriber base growth. Between March ’99 and March ’01, the subscriber base grew by more than 200 percent per year, from 2,80,000 to 30,00,000.
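The "more than 200 percent per year" figure can be checked with a short calculation. The sketch below assumes the growth was compounded annually over the two years from March 1999 to March 2001; the text does not say how the rate was derived, so this is one plausible reading rather than the original method. The function name is illustrative.

```python
def annual_growth_rate(start, end, years):
    """Compound annual growth rate as a fraction (1.0 means 100 percent)."""
    return (end / start) ** (1 / years) - 1


start_subscribers = 280_000    # March 1999 (2,80,000)
end_subscribers = 3_000_000    # March 2001 (30,00,000)

# Assumption: growth compounds once per year over two years.
rate = annual_growth_rate(start_subscribers, end_subscribers, years=2)
print(f"{rate:.0%} per year")
```

Under that assumption the rate comes out at roughly 227 percent per year, which is consistent with the figure quoted above.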

However, from April 2001, the growth rate started declining all over India. The current Internet subscriber base in India is 3.3 million and the user base is 16.5 million. The planned projection is 230 million by the end of the year 2007.

WHAT IS INTERNET FRAUD?

The term “Internet fraud” refers to any type of fraudulent scheme that uses a component of the Internet to conduct fraudulent transactions, or to transmit the proceeds of fraud to financial institutions or to others connected with the scheme. The Internet components include chat rooms, email, message boards, or Web sites.

People can access the Internet at all times. This availability of the Internet increases the time in which people can browse the Internet for business decisions, information-searching, and personal interactions. Unfortunately, people who engage in fraud can also access the Internet 24 hours of the day. They can use the capabilities of the Internet such as sending e-mail messages worldwide in seconds or posting Web site information to carry out fraudulent schemes.

WHAT ARE THE MAJOR TYPES OF INTERNET FRAUD?

The fraud schemes of the non-Internet era have been tuned to suit the Internet era and continue to confound consumers and investors. With the explosive growth of the Internet, and ecommerce in particular, online criminals try to present fraudulent schemes in ways that look, as much as possible, like the goods and services that the vast majority of legitimate e-commerce merchants offer. In the process, they not only cause harm to consumers and investors, but also undermine consumer confidence in legitimate e-commerce and the Internet. Here are some of the major types of Internet fraud that law enforcement and regulatory authorities and consumer organizations are seeing:

Auction and Retail Schemes Online. According to the Federal Trade Commission and Internet Fraud Watch, fraudulent schemes appearing on online auction sites are the most frequently reported form of Internet fraud. These schemes, and similar schemes for online retail goods, typically claim to offer high-value items, ranging from Cartier watches to computers to collectibles such as Beanie Babies®, that are likely to attract many consumers. These schemes induce their victims to send money for the promised items, but then deliver nothing or only an item far less valuable than what was promised.

Business Opportunity/“Work-at-Home” Schemes Online. Fraudulent schemes often use the Internet to advertise business opportunities that will allow individuals to earn thousands of dollars a month in “work-at-home” ventures. These schemes typically require the individuals to pay anywhere from $35 to several hundred dollars or more, but fail to deliver the materials or information that would be needed to make the work-at-home opportunity a potentially viable business.

Identity Theft and Fraud. Some Internet fraud schemes also involve identity theft. Identity theft is the wrongful obtaining and using of someone else’s personal data in some way that involves fraud or deception. In one federal prosecution, the defendants allegedly obtained the names and Social Security numbers of U.S. military officers from a Web site, and then used more than 100 of those names and numbers to apply via the Internet for credit cards with a Delaware bank. In another federal prosecution, the defendant allegedly obtained personal data from a federal agency’s Web site, and then used the personal data to submit 14 car loan applications online to a Florida bank.